- X (Twitter) states explicitly that information you share may be used to train its AI models (Grok); the relevant policy update is dated Oct 16, 2024 and took effect Nov 15, 2024. EU regulators have separately pushed back on some uses. privacy.x.com

- Meta (Facebook/Instagram) uses public content to train generative AI in many regions and, in April 2025, announced it would resume using public EU content, with an objection (opt-out) form for EU users. Its AI features also sit under separate Meta AI terms/policies. AP News

- TikTok documents face & voice information processing for features and publishes policies about AI-generated content; in some official policies (e.g., TikTok Shop SEA) the company explicitly says data is used to “train and improve… machine learning models and algorithms.” TikTok Support

- Pandora (SiriusXM) describes broad data uses for service, analytics and advertising in its July 14, 2025 privacy policy; it does not plainly claim consumer uploads are used to train general AI, though SiriusXM’s filings flag AI-related privacy/security risks at a corporate level. Pandora

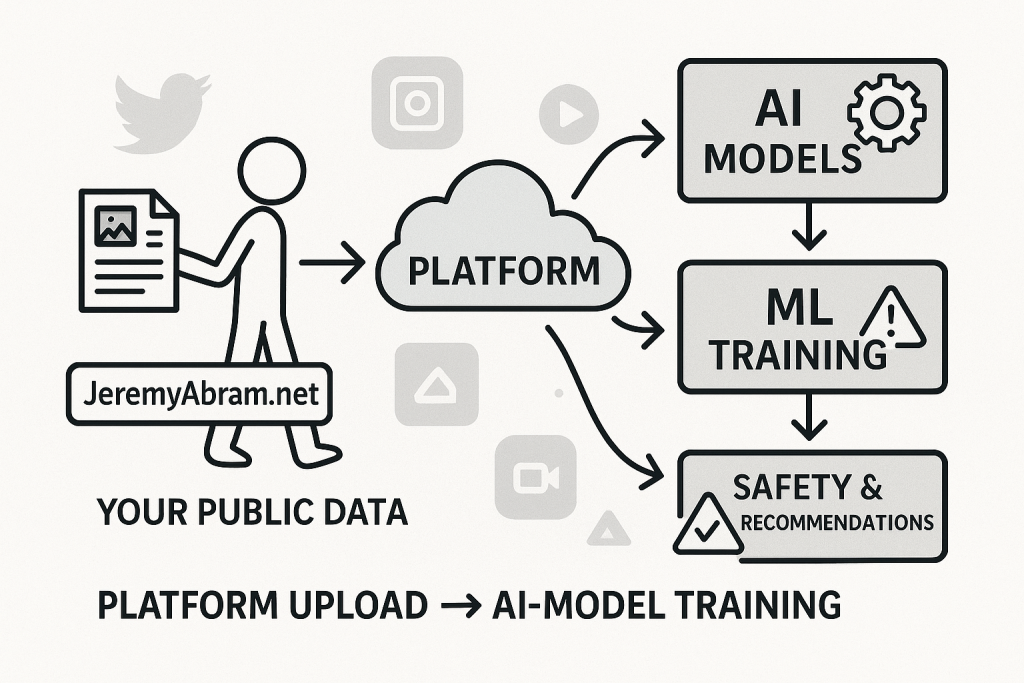

1) What “AI training” typically means on social platforms

When policies say your information is used to “train,” “improve,” or “develop” AI, they usually mean:

- Supervised/weakly supervised training: using public posts, comments, images, videos (and sometimes engagement signals) as examples to teach models to predict or classify.

- Reinforcement/feedback loops: using interactions with chat assistants (e.g., Meta AI, Grok) to improve answers.

- Feature-specific ML: e.g., vision effects, spam/abuse detection, recommendations, safety classifiers—sometimes documented separately from “generative AI.”

Policies vary by region and product. The same company may have different language for: main social apps vs. AI assistants vs. commerce features vs. creator tools.

2) Platform by platform: what the documents actually say

X (formerly Twitter)

- Policy update (Oct 16, 2024): X added “AI and machine learning clarifications”—explicitly stating it may use information you share to train AI models (generative or otherwise), with a pointer to Grok controls. Effective Nov 15, 2024. privacy.x.com

- EU constraints: Following a request from Ireland’s DPC, X agreed not to use some EU user data to train AI until an opt-out consent path exists. Reuters

- Operational transparency: X’s DSA transparency reporting notes that content-moderation ML is trained on platform content and human-labeled cases. (This is separate from Grok but shows routine ML on posts.) transparency.x.com

Implication: If you post on X, assume your public content and interactions can help train both safety/recommendation systems and Grok, subject to regional limits and settings. privacy.x.com

Meta (Facebook & Instagram)

- Generative AI training on public content: In April 2025, Meta said it would resume using public EU content (adult users’ posts/comments) to train AI, with notifications and an objection form. This aligns with how Meta uses public content elsewhere. AP News

- Where to look: Meta’s core Privacy Policy describes how it collects/uses info; Meta also has AI-specific terms/pages (e.g., “Meta AIs” terms) that may apply when you use those features—even if the public explainer page is login-gated. Facebook

Implication: Your public posts/comments can be used to train Meta’s generative AI, with an objection route available in the EU. Interactions with Meta AI may also be used to improve models. Private messages are excluded, according to reporting on the EU plan. AP News

TikTok

- Biometric-adjacent processing (feature-level): TikTok’s Help Center explains how it processes face & voice information in videos/photos/LIVE to power effects, moderation, accessibility, etc. That is on-platform ML tied to features. TikTok Support

- AI content labeling: TikTok details AIGC labeling (creator labels, auto-labels via C2PA metadata) and rules for edited/synthetic media. This governs how AI content is disclosed, not just how data is trained. TikTok Support

- Explicit “train & improve” language (example): In official TikTok Shop (SEA) policy, TikTok says it uses information “to train and improve our technology, such as our machine learning models and algorithms.” (Policies differ by product/region, but this shows the company’s phrasing.) seller-my.tiktok.com

Implication: Expect heavy ML use for recommendations/safety/vision features and, in some products, policy language that includes training models and algorithms. Exact opt-outs vary by region/product.

Pandora (SiriusXM)

- Privacy policy (effective July 14, 2025): Details broad collection/use/sharing for service delivery, analytics, and advertising; gives state-law rights. It does not plainly state a practice of using user media to train general AI. Pandora

- Corporate filings: SiriusXM’s 2024 10-K acknowledges AI-related privacy/security risks at a program level—useful context even if the consumer policy isn’t framed as “training models on your content.” Sirius XM Holdings Inc.

Implication: Risk surface is more about listener/behavioral data and ads/analytics, not platform-scale gen-AI trained on your uploads.

3) What are the benefits platforms claim?

- Better safety & moderation: ML finds spam, impersonation, hate/violence, deepfakes faster than human-only review. X’s transparency reports explicitly describe training content safety models on platform cases. transparency.x.com

- Higher-quality recommendations: All four rely heavily on ML to match content to interests; training on real usage improves ranking, relevance, and discovery. (This is TikTok’s hallmark.) Mage AI

- Product improvements & new features: Generative features (chat assistants, creative effects, auto-captions, translation) typically improve when trained on real interactions. Meta and X both frame AI training as necessary to improve their assistants (Meta AI, Grok). AP News

4) Concrete risks to weigh

- Scope creep on “public” content: Training on public posts/comments can still encode identifiable context (faces, places, usernames) and be hard to meaningfully opt out of outside the EU. Meta’s EU stance includes an objection form; the U.S. lacks a similar universal right. AP News

- Blended training sources & third parties: Even when a company offers an in-product toggle, models may also ingest other public internet data or partner data. That blurs provenance and complicates deletion rights for training copies. (Regulatory interventions in the EU show the friction.) Reuters

- Sensitive signals in media: Vision systems can infer faces, age categories, locations, and affiliations from images/video. TikTok documents face/voice processing; similar capabilities exist across platforms for safety/recommendation, even if policies don’t call it “biometrics.” TikTok Support

- Limited post-hoc control: Opting out (where available) typically stops future training but doesn’t guarantee removal from already-trained model weights. (This is an industry-wide limitation that regulators are still grappling with.) Reporting shows uneven, region-specific relief. AP News

- Cross-product/region variability: TikTok’s Shop privacy language shows concrete “train & improve” phrasing for some surfaces; main-app policies may be phrased differently or be hard for casual readers to reach. As a result, your rights and the wording you are shown vary by which TikTok property you use and where you live. seller-my.tiktok.com

5) Side-by-side: AI-training posture (snapshot, late-2024/2025)

| Platform | Does the policy explicitly mention training on your data? | Scope & notes | Region-specific limits/controls |
|---|---|---|---|
| X (Twitter) | Yes: the policy update clarifies AI model training on information you share (Grok). | Also trains safety/recommendation ML on platform content. | EU: agreed not to use some EU data until an opt-out consent path exists. privacy.x.com, transparency.x.com |
| Meta (FB/IG) | Yes: uses public content to train generative AI; resumed EU training Apr 2025 with objections honored. | Interactions with Meta AI also used to improve models; private messages excluded per AP report. | EU: objection form process; outside EU, rights are narrower. AP News |
| TikTok | Sometimes explicit, e.g., TikTok Shop SEA: “train and improve… machine learning models.” Main app also documents face/voice processing and AIGC labeling. | Heavy ML for recommendations, safety, effects; labeling for AI-generated media. | Controls vary; no single global “opt-out of training” switch publicly documented. seller-my.tiktok.com, TikTok Support |
| Pandora (SiriusXM) | No clear training claim in consumer policy; broad uses for service/analytics/ads. | Lower “media-AI” risk vs. visual-first platforms; focus on listener/behavioral data. | State-law privacy rights (access, delete, opt-out of certain sharing). Pandora |

6) Your levers: what you can actually do (by platform)

- X (Twitter): Review Privacy & Safety → Grok settings and disable training where offered; remember that EU users currently benefit from stronger constraints. privacy.x.com

- Meta (FB/IG): In the EU, use the objection form when notified; elsewhere, audit your public vs. private sharing and consider shrinking your public footprint if training bothers you. AP News

- TikTok:

  - Manage Downloads/Duet/Stitch and audience on posts.

  - Understand that features may process face/voice; avoid effects that require face landmarks if you’re uncomfortable.

  - Follow TikTok’s AIGC labeling policies (and watch for auto-labels). TikTok Support

- Pandora: Exercise state privacy rights (access/delete/limit sharing) via Pandora/SiriusXM’s privacy pages. Pandora

7) Strategic risk-reduction (expert checklist)

- Assume public = trainable. Keep truly sensitive media private or off-platform. Where possible, limit the audience to friends or close friends before posting. (Relevant for Meta/X/TikTok.) AP News

- Control assistant interactions. If you use Grok or Meta AI, treat prompts as potentially trainable inputs, then delete histories or toggle training settings where available. privacy.x.com

- Minimize biometric-like signals. Skip face-tracking effects and close-ups if concerned; TikTok explains face/voice info processing explicitly. TikTok Support

- Use regional rights. In the EU/UK, leverage objection/consent tools; outside these regions, focus on audience controls and deletion of content/interactions. AP News

- Separate work from personal. Creators/brands should segregate public promo from sensitive/private assets (e.g., client photos with EXIF) to avoid inadvertently contributing to training data.

- Check product-specific policies. TikTok’s Shop language shows how training clauses can appear in adjacent products even if the main app’s policy words it differently. seller-my.tiktok.com
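The “separate work from personal” item above mentions client photos that carry EXIF metadata. As a minimal sketch of what removing that metadata before upload can look like, the Python below drops APP1 segments (the JPEG segments where EXIF and XMP data are normally stored) from a JPEG byte string using only the standard library. The function name `strip_app1_segments` is hypothetical and introduced here for illustration. The sketch assumes a well-formed baseline JPEG and does not touch other metadata containers (for example IPTC data in APP13) or non-JPEG formats.

```python
import struct


def strip_app1_segments(jpeg: bytes) -> bytes:
    """Return a copy of a JPEG byte string without APP1 (EXIF/XMP) segments.

    Hypothetical helper for illustration; assumes a well-formed JPEG.
    """
    if jpeg[:2] != b"\xff\xd8":
        raise ValueError("not a JPEG: missing SOI marker")

    out = bytearray(b"\xff\xd8")  # keep the start-of-image marker
    pos = 2
    while pos + 4 <= len(jpeg):
        if jpeg[pos] != 0xFF:
            raise ValueError(f"expected a segment marker at offset {pos}")
        marker = jpeg[pos + 1]
        if marker == 0xDA:
            # Start of scan: compressed image data follows, copy the rest as-is.
            out += jpeg[pos:]
            return bytes(out)
        # The 2-byte big-endian length counts itself but not the marker bytes.
        (length,) = struct.unpack(">H", jpeg[pos + 2 : pos + 4])
        if marker != 0xE1:  # 0xE1 is APP1; keep every other segment
            out += jpeg[pos : pos + 2 + length]
        pos += 2 + length

    out += jpeg[pos:]  # copy any trailing bytes unchanged
    return bytes(out)
```

A typical use would be to read a file with `open(path, "rb")`, pass the bytes through the function, and write the result to a new file, keeping the original untouched. Dedicated imaging tools handle far more edge cases; this only shows the idea.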

8) The bigger picture: why the rules differ (and keep changing)

- Regulatory asymmetry: EU regulators (under GDPR/DSA) are actively pushing platforms to add consent/objection routes for AI training; U.S. users often lack equivalent levers. X paused certain EU uses; Meta rolled out an EU objection mechanism. Reuters

- Operational opacity: Even with toggles, trained models are composite artifacts; removing specific data from already-trained models remains technically and legally complex—one reason most controls are forward-looking. (Industry-wide reality reflected in coverage and platform notices.) AP News

- Platform economics: The business case for recommendations, safety, and new AI features drives continuous training on real user interactions—hence the broad “improve our services/models” language you see across policies. transparency.x.com

Sources (primary, recent)

- X (Twitter): Privacy/ToS update (AI model training clarifications), Oct 16, 2024; DSA transparency on ML training data; EU limitation coverage. privacy.x.com, transparency.x.com

- Meta (FB/IG): Core Privacy Policy; Apr 2025 EU announcement on resuming training on public EU content with an objection form. Facebook, AP News

- TikTok: Help Center pages on face & voice information processing and AI-generated content labeling; TikTok Shop SEA policy with “train and improve… machine learning models.” TikTok Support, seller-my.tiktok.com

- Pandora (SiriusXM): Privacy Policy effective July 14, 2025; SiriusXM 10-K (Feb 1, 2024) noting AI-related risks. Pandora, Sirius XM Holdings Inc.
