From Touchscreens to Touchless Minds: Why the Human Body Is Becoming the Ultimate Operating System

For decades, we interacted with technology through external tools: keyboards, mice, touchscreens, voice assistants. Each generation of interface shortened the cognitive distance between human intent and digital action.

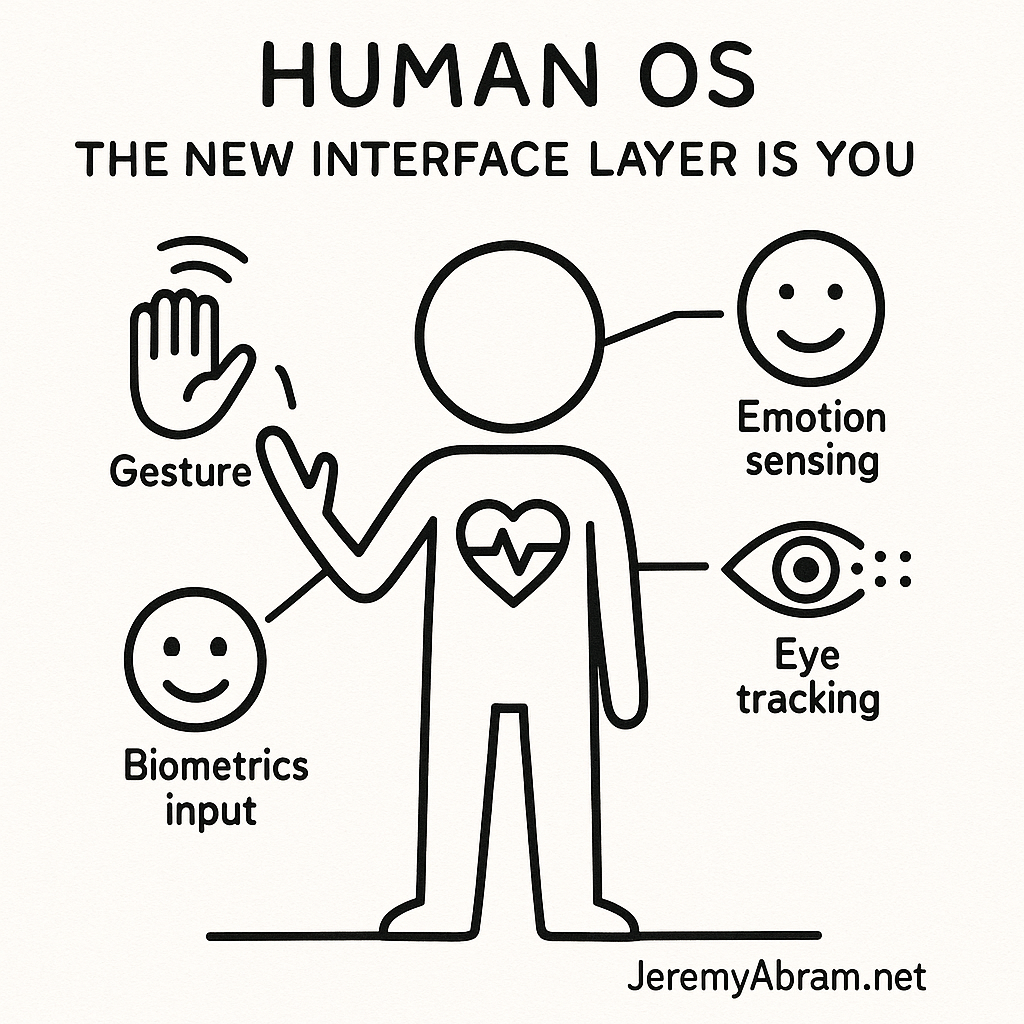

Now, a new evolution is emerging — not a device, but a design philosophy:

The human being is becoming the interface.

Biometric signals, emotional states, and subtle gestures are moving from passive data to active input, forming what some technologists describe as a Human OS: a layer in which your body and mind act as the controller.

This shift represents the most intimate human-machine integration since computing began.

From Device Interaction to Self-Interaction

| Past Interface Era | Core Input | Distance from Human Intent |
|---|---|---|
| Physical Buttons | Mechanical touch | High |
| Keyboard & Mouse | Hand-driven precision | Moderate |
| Touchscreens | Direct physical touch | Low |
| Voice Assistants | Natural speech | Lower |
| Human OS | Body, emotion, biometrics | None |

The history of computing has been about reduction — reducing latency, friction, translation. Human OS eliminates the interface barrier altogether.

You do not go to technology. It comes through you.

Key Components of Human OS

1. Gesture-Based Control

Motion as command is no longer science fiction. Cameras, IR depth sensors, radar arrays, and muscle-signal readers allow systems to interpret:

- Hand waves, finger taps in mid-air

- Wrist rotations for scrolling

- Muscle twitches to execute commands

- Facial micro-expressions for intention mapping

- Eye tracking for cursor control

Apple’s Vision Pro with its eye and hand tracking, Meta’s neural wristband research, and in-vehicle gesture controls such as BMW’s are all evidence of the shift.

Gesture isn’t input — it’s intention embodied.
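To make "wrist rotations for scrolling" concrete, here is a minimal sketch of how a roll angle might be turned into a scroll step. The class name `ScrollMapper`, the dead zone, the gain, and the clamp are all illustrative assumptions, not values from any shipping product; a real system would tune them per user and per sensor.

```python
from dataclasses import dataclass


@dataclass
class ScrollMapper:
    """Maps a wrist roll angle (degrees) to a scroll delta (pixels)."""
    dead_zone_deg: float = 5.0    # assumed: ignore small, unintentional rotations
    gain_px_per_deg: float = 4.0  # assumed sensitivity
    max_step_px: float = 120.0    # assumed clamp so a sudden twist cannot fling the page

    def scroll_delta(self, roll_deg: float) -> float:
        magnitude = abs(roll_deg)
        if magnitude <= self.dead_zone_deg:
            return 0.0
        # Only the rotation beyond the dead zone counts toward the scroll step
        step = (magnitude - self.dead_zone_deg) * self.gain_px_per_deg
        step = min(step, self.max_step_px)
        # Preserve direction: positive roll scrolls one way, negative the other
        return step if roll_deg > 0 else -step
```

The dead zone is the important design choice here: without it, every tremor of the hand would register as intent.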

2. Emotion-Aware Interfaces

Emotion recognition tech is accelerating, powered by AI models that analyze:

- Voice tone and cadence

- Heart rate variability

- Breathing patterns

- Facial tension

- Micro-expressions

- Galvanic skin response

If you are stressed, the system adjusts notifications.

If you are frustrated, the UI simplifies.

If you are bored, learning systems escalate the challenge.

If you are excited, games react dynamically.

The interface becomes empathetic, responding to psychological state rather than taps and clicks.
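The if-then rules above can be sketched as a simple lookup. This is only an illustration: the `State` enum, the `choose_adaptation` function, and the 0.8 confidence threshold are hypothetical, and the sketch assumes some upstream model has already produced a state estimate with a confidence score.

```python
from enum import Enum


class State(Enum):
    STRESSED = "stressed"
    FRUSTRATED = "frustrated"
    BORED = "bored"
    EXCITED = "excited"
    NEUTRAL = "neutral"


# One adaptation per state, mirroring the rules described in the text
ADAPTATIONS = {
    State.STRESSED: "defer non-urgent notifications",
    State.FRUSTRATED: "simplify the UI",
    State.BORED: "raise the challenge level",
    State.EXCITED: "intensify game dynamics",
}


def choose_adaptation(state: State, confidence: float, threshold: float = 0.8) -> str:
    """Return an adaptation only when the estimate is confident enough."""
    if state is State.NEUTRAL or confidence < threshold:
        return "no change"
    return ADAPTATIONS[state]
```

Gating on confidence matters because a wrong guess about someone's mood is more jarring than no adaptation at all.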

3. Biometric & Neural Input

Biometrics have moved from security to interaction logic:

| Biometric | Interface Use |
|---|---|
| Heart rhythm | Authentication + emotional state modeling |
| Pupil dilation | Engagement + content adaptation |
| EEG signals | Direct neural commands |
| Muscle electrical activity | Fine-grained motion control |
| Blood oxygen + HRV | Health-aware notification pacing |

This is not simply sensing the human — it is collaborating with the human.

We move from "I click" to "I am", and the interface responds.
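As one example of a biometric becoming interaction logic, heart rate variability is often summarized with RMSSD, the root mean square of successive differences between heartbeat (RR) intervals. The sketch below computes it and feeds it into a pacing rule. The function `notification_gap_seconds`, the 20 ms cutoff, and the gap lengths are illustrative assumptions only, not clinical guidance.

```python
import math


def rmssd(rr_intervals_ms):
    """Root mean square of successive differences between RR intervals (ms)."""
    if len(rr_intervals_ms) < 2:
        raise ValueError("need at least two RR intervals")
    diffs = [b - a for a, b in zip(rr_intervals_ms, rr_intervals_ms[1:])]
    return math.sqrt(sum(d * d for d in diffs) / len(diffs))


def notification_gap_seconds(rmssd_ms, low_hrv_ms=20.0):
    """Hypothetical pacing rule: space notifications out when HRV is low."""
    # Assumed values: 15 minutes between notifications under low HRV, else 1 minute
    return 900 if rmssd_ms < low_hrv_ms else 60
```

For instance, `notification_gap_seconds(rmssd([800, 810, 790, 800]))` would pick the longer gap, since the variability in that short series falls under the assumed cutoff.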

4. The Rise of Passive Input

Traditional computing depends on active user behavior.

Human OS thrives on ambient intent.

Examples:

- Smart home adjusts based on stress levels

- AR displays update when gaze shifts

- Wearables anticipate actions based on learned patterns

- Cars detect driver micro-fatigue and intervene

- Fitness systems adapt in real-time to physiology

Your body becomes an always-on signal system.

The interface no longer waits — it anticipates.

Why This Shift Was Inevitable

Three forces converged:

AI Perception

Modern AI can estimate emotion, voice tone, posture, attention, and stress with increasing accuracy, though reliability still varies by person and context.

Sensor Expansion

Consumer devices now track eye movement, muscle activity, cardiovascular patterns, and other signs of nervous-system activity in real time.

Cognitive Economics

Humans prefer interfaces that demand the least cognitive load.

Touchscreen → voice → no action beyond being.

When your body speaks, machines listen.

Benefits: A New Kind of User Experience

1. Frictionless Control

Technology feels invisible — actions emerge as naturally as breathing or walking.

2. Adaptive Environments

Lighting, notifications, digital noise — tuned to internal state.

3. Inclusive Interaction

People with mobility or speech limitations gain greater control.

4. Cognitive Augmentation

Technology reinforces attention, wellbeing, and memory based on real needs.

Human OS doesn’t just respond — it supports.

Risks & Ethical Frontiers

Where humans become the interface, stakes rise.

| Concern | Why It Matters |
|---|---|
| Emotional privacy | Your feelings become machine-readable |
| Behavior prediction | Predictive systems risk manipulation |
| Autonomy erosion | Interfaces act before you choose |
| Security | A leaked biometric cannot be reset like a password |
| Psychological dependency | Comfort can become compliance |

The more seamless the interface, the more invisible its influence.

Invisible UX can mean invisible control.

Design Principles for a Human-OS Future

To build responsibly:

- Consent as default, not afterthought

- Emotional data firewalls

- User override authority — always

- Context-aware, not context-dominant systems

- Human agency over algorithmic anticipation

- Clear emotional transparency indicators

The goal is not machine control of humans —

but machine alignment with human intention.
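Two of these principles, consent as default and user override, translate fairly directly into code. The sketch below is one possible shape, assuming a hypothetical `ConsentGate` class and made-up signal names such as `"heart_rate"`; it is not drawn from any existing framework.

```python
from dataclasses import dataclass, field


@dataclass
class ConsentGate:
    """Tracks which signal types the user has explicitly opted into."""
    granted: set = field(default_factory=set)
    paused: bool = False  # user override: stops all adaptation at once

    def grant(self, signal: str) -> None:
        self.granted.add(signal)

    def revoke(self, signal: str) -> None:
        self.granted.discard(signal)

    def may_use(self, signal: str) -> bool:
        # Default is deny: a signal is usable only after an explicit grant,
        # and never while the user has paused the system
        return not self.paused and signal in self.granted
```

Every adaptive feature would call `may_use` before reading a signal, so revoking consent or flipping `paused` takes effect immediately rather than at the next settings sync.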

The Operating System Was Never the Device — It Was Us

We are entering an era where:

- The body is the cursor

- Emotion is the command

- Attention is the navigation field

- Identity is the security key

- Intention is the language

Human OS marks the moment technology stops being a tool we use and becomes a space we inhabit — shaped by who we are, not what we press.

In this future, the interface disappears.

You are the interface.

And the question becomes:

How much of yourself are you willing to let technology read?

The next UX revolution isn’t touch or voice.

It’s presence.

Closing Thought

Human OS promises a world where technology finally learns to speak human.

But if we are not vigilant, it may also learn to shape human.

The future interface is the self —

and the greatest design challenge is ensuring

the self stays sovereign.
