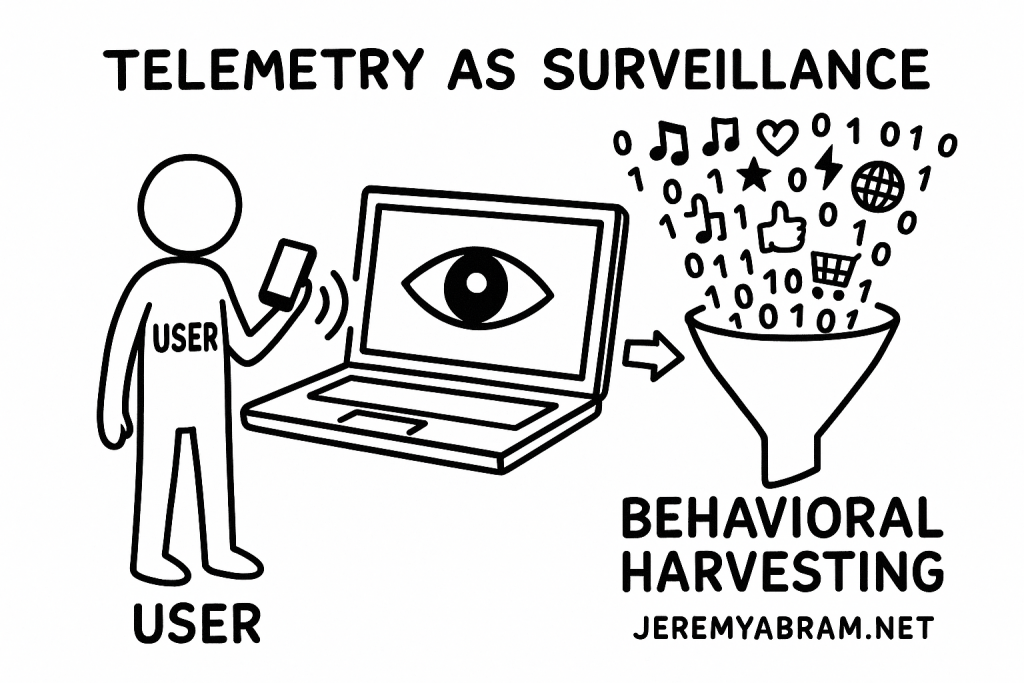

When “performance improvement” is really behavioral harvesting

In modern software, one phrase has become so common it fades into digital wallpaper:

“We collect diagnostic data to improve performance.”

It sounds harmless. Helpful, even. Who doesn’t want faster apps and smarter systems?

But the truth beneath this corporate euphemism is far more complex:

Telemetry isn’t a side-effect of modern software.

Telemetry is the business model.

We live in an economy where behavioral data — not the software — is the product. “Performance improvement” often means predicting, shaping, and monetizing user behavior, not fixing bugs.

And most consumers never realize it’s happening.

The Quiet Shift: Software as Surveillance

Years ago, software was a product. You bought it, installed it, and used it. It ran on your machine and stayed there.

Then the cloud arrived — and with it, a new currency: behavior.

Companies realized that real value didn’t come from selling software — it came from studying the people who used it:

| Old Model | New Model |
|---|---|
| Sell software | Give or sell software to harvest data |
| Profit from product | Profit from behavioral analytics |
| Improve code | Improve influence and retention |

Telemetry began as diagnostics — crash reports, system performance metrics — a reasonable and even necessary practice. But telemetry evolved into something else:

User insight → behavior prediction → behavior control → revenue

Once companies saw how profitable behavioral data could be, telemetry changed from optional to foundational:

- Browsers track your clicks and reading time

- Phones log your gestures and movement patterns

- Smart TVs record viewing habits, and voice-enabled models may capture microphone audio

- Apps monitor scrolling behavior, tap frequency, idle time

- Cars now collect driving patterns, location trails, voice requests

Today, anything that can be measured will be measured.

“Opt Out” — The Illusion of Consent

Platforms claim users can opt out of tracking. But the mechanics tell a different story:

- “Diagnostic data” that can’t be disabled

- Opt-outs hidden behind menus, legal jargon, or friction

- Consent banners designed to nudge compliance

- Features disabled unless telemetry remains enabled

- “Anonymous” data that can often be re-identified by combining a few ordinary attributes

- Forced software updates that re-enable tracking by default

These patterns are not accidental. They draw on techniques from persuasive design, commonly known as dark patterns: interface tactics engineered to steer behavior.

User choice exists — theoretically.

In practice? You choose what the system wants, or you lose functionality.

That’s not consent — that’s coercive architecture.
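One item in the list above, the claim that “anonymous” data can be re-identified, is easier to judge with a concrete case. The sketch below is a toy illustration, not a description of any real product: the records, field names, and the `unique_fraction` helper are all invented for this example. It measures how many rows become unique once a few harmless-looking attributes are combined.

```python
from collections import Counter

# Hypothetical "anonymized" telemetry rows: no name, no email, no account ID.
# All field names and values are made up for illustration.
records = [
    {"zip": "94110", "birth_year": 1985, "device": "phone"},
    {"zip": "94110", "birth_year": 1985, "device": "tablet"},
    {"zip": "94110", "birth_year": 1991, "device": "phone"},
    {"zip": "10001", "birth_year": 1985, "device": "phone"},
    {"zip": "10001", "birth_year": 1985, "device": "phone"},
]

def unique_fraction(rows, fields):
    """Share of rows whose combination of `fields` appears exactly once."""
    keys = [tuple(row[f] for f in fields) for row in rows]
    counts = Counter(keys)
    return sum(1 for k in keys if counts[k] == 1) / len(rows)

# On its own, the ZIP code singles out nobody in this toy dataset.
zip_only = unique_fraction(records, ["zip"])  # 0.0

# Combined, the same "harmless" fields single out three of the five rows.
combined = unique_fraction(records, ["zip", "birth_year", "device"])  # 0.6
```

A row that is unique on its attribute combination can be linked to a person by anyone who knows those same attributes from another source, which is why removing names alone is a weak guarantee.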

From Bugs to Behavior Modification

Telemetry systems don’t just observe. They learn and react.

A/B testing and machine learning systems feed on user telemetry, training algorithms to maximize:

- Engagement

- Click-through rates

- Screen time

- Subscription retention

- Emotional triggers and responses

- Conversion to purchases or ads

Metrics define the experience — not creativity, not ethics, not user wellbeing.

If a platform depends on your attention, telemetry becomes a psychological engine.

You are not just being measured; you are being steered.
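To make the feedback loop concrete, here is a minimal sketch of one common optimization technique, an epsilon-greedy experiment, run against simulated users. Everything in it is an assumption for illustration: the variant names, the click rates, and the `run_experiment` function are hypothetical, and real platforms use far more elaborate systems. What it shows is the mechanism: each click event reported back changes what the next user is shown.

```python
import random

def run_experiment(true_click_rates, rounds=5000, epsilon=0.1, seed=0):
    """Epsilon-greedy selection between interface variants.

    `true_click_rates` stands in for real user behavior, which the
    system never sees directly; it only sees the click telemetry.
    """
    rng = random.Random(seed)
    variants = list(true_click_rates)
    shows = {v: 0 for v in variants}
    clicks = {v: 0 for v in variants}

    def observed_rate(variant):
        # Click-through rate estimated purely from collected telemetry.
        return clicks[variant] / shows[variant] if shows[variant] else 0.0

    for _ in range(rounds):
        if rng.random() < epsilon:
            # Explore: occasionally show a random variant.
            choice = rng.choice(variants)
        else:
            # Exploit: show whatever has earned the most clicks so far.
            choice = max(variants, key=observed_rate)
        shows[choice] += 1
        # Simulated telemetry event: did this user click?
        if rng.random() < true_click_rates[choice]:
            clicks[choice] += 1
    return shows, clicks

# Hypothetical variants: a neutral banner and a more pressuring one.
shows, clicks = run_experiment({"calm_banner": 0.1, "urgent_banner": 0.6})
```

Nothing in the loop asks whether the winning variant is good for the user. It only asks which one gets clicked, and then shows that one to nearly everybody.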

The Rise of “Experience Engines”

Telemetry gives companies a lens into digital life:

- What annoys you

- What excites you

- What slows you down

- What delays your dopamine hit

- What pushes you to upgrade, subscribe, share, rage, return

These systems continuously refine human-machine interaction loops.

What began as telemetry has grown into:

- Behavioral economics in software

- AI-driven reinforcement systems

- Emotion and sentiment prediction

- Personalized persuasion pipelines

Where products once served users, users now serve product metrics.

Why Privacy Law Can’t Keep Up

Regulations like GDPR and CCPA attempt to address data harvesting. But they are built mainly around an older problem: the collection and storage of personal data.

Modern telemetry isn’t about static data.

It’s about pattern modeling, prediction, and influence.

You can delete your browsing history.

You can’t delete the behavioral fingerprint the system has already learned.

Future regulation will need to address:

- Behavioral analytics limits

- Algorithmic transparency

- Stronger enforcement of data minimization, which GDPR already requires in principle

- Consent reform

- Psychological manipulation safeguards

Until then, telemetry will continue shaping digital behavior at scale.
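Of the items listed above, data minimization is the easiest to picture in code. The following is a rough sketch under stated assumptions, not a compliance recipe: the field names, the `ALLOWED_FIELDS` set, and the `minimize` function are hypothetical. The idea is that a crash report passes through an explicit allowlist before it leaves the device, so anything not needed for diagnostics is dropped by default.

```python
# Hypothetical allowlist: only what is needed to diagnose a crash.
ALLOWED_FIELDS = {"app_version", "os", "error_code"}

def minimize(event):
    """Keep only allowlisted fields; everything else is dropped."""
    return {key: value for key, value in event.items() if key in ALLOWED_FIELDS}

# An invented raw event mixing diagnostic and behavioral fields.
raw_event = {
    "app_version": "4.2.1",
    "os": "linux",
    "error_code": "E_TIMEOUT",
    "scroll_depth": 0.83,        # behavioral, not diagnostic
    "idle_seconds": 41,          # behavioral, not diagnostic
    "advertising_id": "abc123",  # identifier, not diagnostic
}

report = minimize(raw_event)
```

An allowlist fails closed: a newly added behavioral field is excluded unless someone deliberately adds it, which is the opposite of collecting everything and filtering later.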

Telemetry as a New Human Interface

To understand the modern digital experience, one must recognize:

Telemetry isn’t about software — it’s about people.

It doesn’t just measure what we do. It trains systems to anticipate us, shape us, and profit from us.

That is the defining business model of the digital era.

Not selling software.

Not advertising alone.

But behavioral extraction and prediction.

The invisible trade isn’t code for convenience —

it’s habits for influence.

What Users Can Do

You don’t need to throw your phone in a lake. But awareness leads to leverage:

✅ Use privacy-respecting browsers and search engines

✅ Turn off telemetry wherever the option exists

✅ Avoid “free” services where behavior is the product

✅ Use firewall rules and DNS blocking

✅ Read permissions like contracts — because they are

✅ Ask a new question:

Who benefits from this data?
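For readers curious what DNS blocking from the list above does under the hood, here is a minimal sketch of the matching logic. It is an illustration only: the `is_blocked` function and the blocklist entries (reserved example domains) are hypothetical, and real blockers add caching, wildcards, and allowlists on top. The core idea is that a lookup is refused when the requested name, or any parent domain of it, appears on the list.

```python
def is_blocked(hostname, blocklist):
    """Return True if hostname is a blocked domain or a subdomain of one."""
    labels = hostname.lower().rstrip(".").split(".")
    # For "a.b.c", check "a.b.c", then "b.c", then "c".
    return any(".".join(labels[i:]) in blocklist for i in range(len(labels)))

# Hypothetical blocklist using reserved example domains.
BLOCKLIST = {"telemetry.example.com", "metrics.example.net"}

is_blocked("eu.telemetry.example.com", BLOCKLIST)     # True: subdomain of a blocked name
is_blocked("example.com", BLOCKLIST)                  # False: parent domain is not listed
is_blocked("nottelemetry.example.com", BLOCKLIST)     # False: matches whole labels only
```

Matching on whole labels matters: a naive substring check would block unrelated hosts that merely contain a listed name.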

The Real Question

Telemetry is not inherently evil.

Diagnostics matter. Performance metrics matter.

The problem is purpose, not data.

When telemetry exists to help users, it is a tool.

When telemetry exists to shape users, it becomes a system of control.

The future of privacy will hinge on answering one crucial question:

Is the software improving for us — or are we being optimized for it?
